Edvancer's Knowledge Hub

Data Cleaning – the secret ingredient to the success of any Data Science Project

- Identifying relevant data and removing irrelevant data

- Fix Irregular cardinality and structural errors

- Outliers

- Missing data treatment

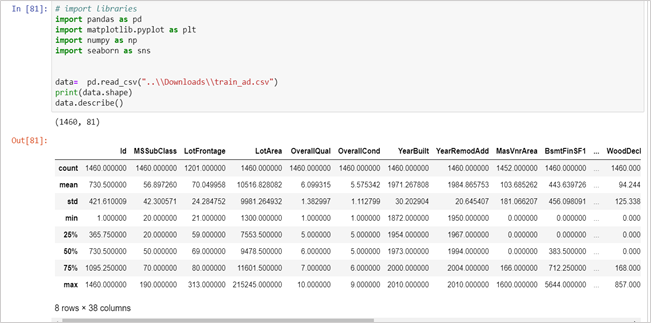

Figure 2: Check for Null Values

Figure 2: Check for Null Values

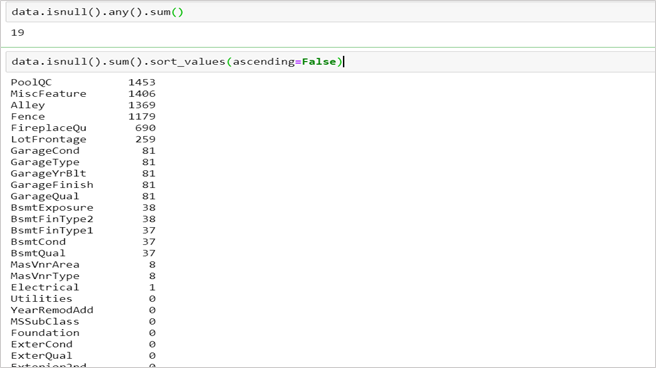

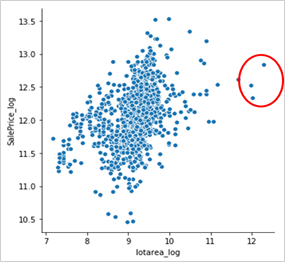

Figure 3: Outliers highlighted in red

Figure 3: Outliers highlighted in red

Identifying relevant data and removing irrelevant data

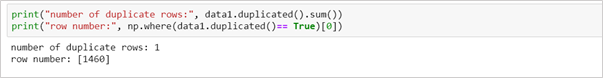

Mainly two checks should be carried out for identifying irrelevant data: 1. Duplicate entries Data is usually collected from many sources and joined together to form one dataset. This might lead to the duplicity of observations. This might not be problematic if observations (small in number) are repeated a few times but could lead to erroneous behaviour if observation(s) are repeated far too many times. Hence, it is better to drop duplicate observations to have a cleaner dataset. 2. Irrelevant observations

The dataset would contain observations which might not be useful for your specific task. For example, if you were analysing the shopping behaviour of women only, you would not require observations for men in your dataset — row-wise.

Similarly, your data might have a column for id or names of employees which would not be very helpful in making predictions and could be dropped — column-wise.

2. Irrelevant observations

The dataset would contain observations which might not be useful for your specific task. For example, if you were analysing the shopping behaviour of women only, you would not require observations for men in your dataset — row-wise.

Similarly, your data might have a column for id or names of employees which would not be very helpful in making predictions and could be dropped — column-wise.

Fix Irregular cardinality and structural errors

- Drop the columns which have a cardinality of 1 (for categorical features), or zero or very low variance (for continuous features). Such features do not provide much information and are not useful for building predictive models.

- Categorical columns could have a high number of classes due to typos or inconsistent capitalization. For example, the gender column might have many classes like male, female, m, f, M, and F, these represent only two levels — Male and Female. Such classes should be mapped to proper levels and the rest of the levels should be dropped. Bar plots could be used to highlight such issues.

- Ensure that the data is represented by the correct data type i.e. numbers are stored as int/float, date as a date object.

Outliers

In the previous blog, we discussed ways to identify outliers. To summarise, data points could be treated as if the data points lie:- above Q3 + 1.5*IQR or below Q1–1.5*IQR, if the data follows a non-Gaussian distribution

- 2 or 3 standard deviations away from the feature mean (z-score), if the data follows a Gaussian distribution

- Visualise univariate variables by plotting Box plots, histograms or scatterplot (as shown in figure 3)

Ways to deal with Outliers

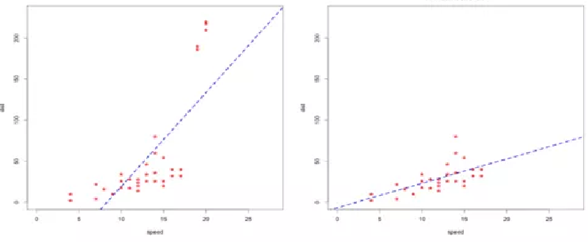

1. To retain or to trim We should not drop any observations without careful consideration, as such information can be valuable to understand the unusual behaviour or anomalies in the data unless we are sure that the outlier may be due to measurement error or if the presence of outliers influences the fit of a model. Figure 4: With Outliers (left pic), the regression line seems to be influenced by the extreme points in the top. Without Outliers (right pic). the regression line better fits the data after dropping the extreme points.

Figure 4: With Outliers (left pic), the regression line seems to be influenced by the extreme points in the top. Without Outliers (right pic). the regression line better fits the data after dropping the extreme points.

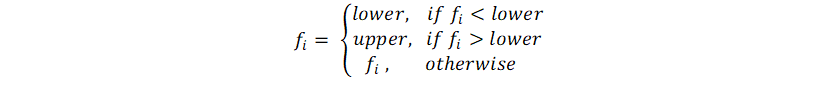

2. Winsorising or Clamp Transformation: Winsorising replaces the outliers with the nearest nonsuspect data. This is also called clamp transformation as we clamp all values above an upper threshold and below a lower threshold to these threshold values, thus capping the values of outliers:

where fi is a specific value of feature f, and lower and upper are the lower and upper thresholds., given by either the IQR method or z-score discussed above.

It is recommended to apply the clamp transformation in cases where it is suspected that a model is performing poorly due to the presence of outliers. The better way to evaluate the impact of winsorising is by comparing the performance of different models trained on datasets where the transformation has been applied and where it has not.

3. Use algorithms robust to outliers

Tree-based algorithms and boosting methods are insensitive to outliers due to the intrinsic nature of the recursive binary splitting approach used to partition the feature space. These algorithms are the best bet when there are outliers in the input features. If there are outliers in the target variable, tree-based algorithms are good but care must be taken to choose the loss function. Reason being that if we use the mean squared error function, then the difference is squared and would highly influence the next tree since boosting attempts to fit the (gradient of the) loss. However, there are more robust error functions that can be used for boosted tree methods like Huber Loss and Mean Absolute Deviation loss. Data Cleaning and Descriptive Statistics and EDA are a very important part of data science life cycle. Understanding the data is crucial to get high-level insights about the population behaviour and it also helps you decide which transformations to apply, if required and which algorithms would give best results for your specific business problem. We will talk about how to treat missing values in the next blog. References: https://www.r-bloggers.com/outlier-detection-and-treatment-with-r/Latest posts by Yogita Kinha (see all)

- A simple guide to Hypothesis Testing - September 24, 2019

- Accelerate your job search with Word cloud in Python - September 22, 2019

- AI revolution in Healthcare industry - September 8, 2019

Follow us on